Hybrid AI architectures are emerging as a potent option in the rapidly changing world of AI because they combine the strengths of Small Language Models (SLMs) and Large Language Models (LLMs). At a time when businesses increasingly demand scalable, flexible, and highly accurate AI solutions, pairing the two kinds of model allows more nuanced language processing and is set to drive impactful results across industries.

Hybrid models are increasingly being seen in finance, healthcare, and customer support. A 2023 survey found that 58% of organizations were experimenting with AI language models, while 23% had already deployed commercial versions. This trend points to a rising need for AI solutions powerful enough to handle both structured and unstructured data in an easy-to-process manner.

What are Hybrid AI Architectures?

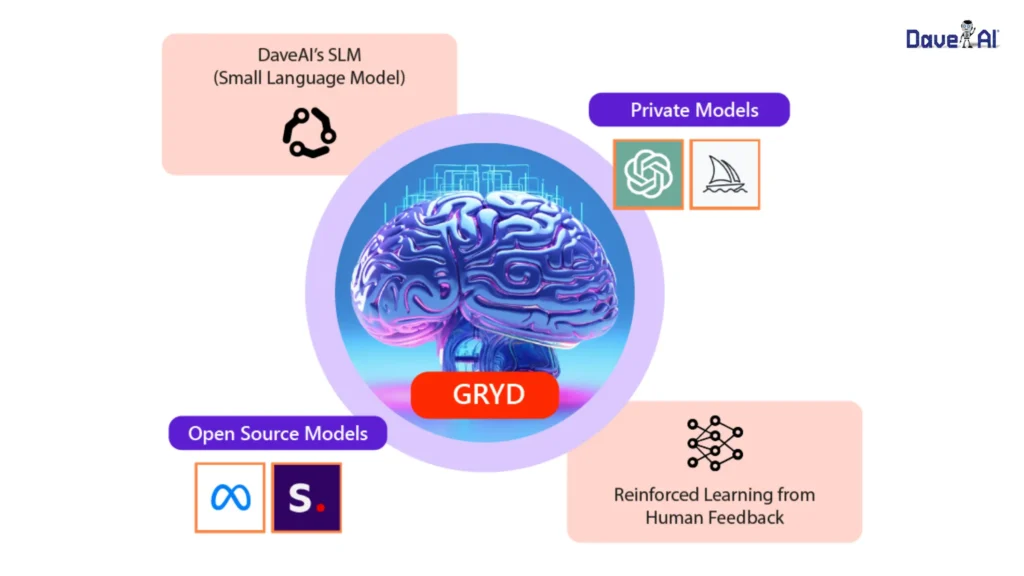

Hybrid AI architectures combine SLMs and LLMs into a single system that handles both simple structured data and complex unstructured data effectively.

Smaller models (SLMs), which often use neural networks but on a smaller scale, are excellent for simpler, resource-efficient natural language processing tasks such as keyword analysis or simple data classification. In contrast, LLMs, powered by transformers and deep learning, are very effective at text generation, question answering, and advanced comprehension.

By bringing together the resource-efficient, task-focused abilities of smaller models and the rich contextual and generative capabilities of LLMs, a hybrid AI model offers the versatility needed to tackle the diverse data environments found in real-world applications such as customer support and data-driven decision-making.

The Basics: Understanding SLM (Small Language Models) and LLM (Large Language Models)

Small Language Models (SLM)

Small language models use neural networks with fewer parameters than large language models, making them computationally efficient for many language tasks. They often use transformer architectures similar to those in LLMs but scaled down to prioritize speed and lower resource consumption. SLMs predict words by learning from a sizable dataset and capturing contextual nuances, though they’re optimized to work within more constrained environments.

For instance, a smaller transformer model can be applied to text classification, summarization, or predictive text where computational resources are limited. Such a model also fits well into mobile applications or lightweight platforms, where accuracy must be maintained without high processing power.

Large Language Models (LLM)

LLMs are built using enormous neural networks, mainly transformer architectures such as those behind GPT-4 and BERT. Transformers work well on sequences, which makes them well suited to language applications. For example, GPT-3, a transformer-based LLM, has 175 billion parameters trained on enormous datasets, making it proficient at creating coherent and creative responses from simple prompts.

These neural networks operate in layers, with each subsequent layer capturing increasingly abstract features of language, from the meaning of words to the complex relationships between them. This allows LLMs to excel at tasks such as text summarization and natural language generation with high accuracy.


SLM vs. LLM

Because SLMs are less data- and compute-intensive, they train quickly and run inference fast, delivering results at a lower price for applications like grammar checking or keyword analysis. SLMs also strike a balance between resource efficiency and performance. Training SLMs is far more cost-effective because they have fewer parameters, and offloading the processing workload to edge devices further cuts infrastructure and operating expenses.

In contrast, LLMs offer a much richer contextual understanding, but their resource intensity calls for big training datasets. An LLM needs to be trained on vast corpora of text (on the order of billions of pages) to ensure accuracy, learning grammar, conceptual relationships, and semantics through self-supervised learning, which in turn enables zero-shot use on tasks it was not explicitly trained for. In real-world operations, however, both models are essential for balancing efficiency against accuracy.
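To make the resource gap concrete, here is a back-of-the-envelope sketch of how much memory the model weights alone occupy. It assumes 2 bytes per parameter (16-bit weights) and uses a hypothetical 1-billion-parameter model to stand in for an SLM; the 175-billion figure is the GPT-3 parameter count mentioned earlier. Real deployments also need memory for activations and caches, so these numbers should be read as rough lower bounds.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights only, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


SLM_PARAMS = 1_000_000_000      # hypothetical small model, for illustration only
LLM_PARAMS = 175_000_000_000    # GPT-3 parameter count

slm_gb = weight_memory_gb(SLM_PARAMS)
llm_gb = weight_memory_gb(LLM_PARAMS)

print(f"SLM weights: ~{slm_gb:.0f} GB")
print(f"LLM weights: ~{llm_gb:.0f} GB")
print(f"Ratio: {llm_gb / slm_gb:.0f}x")
```

Under these assumptions the small model fits on a single consumer device, while the large one needs hundreds of gigabytes before any request is served.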

Benefits of Hybrid AI Architectures

Combining SLMs and LLMs delivers several benefits, making hybrid AI an attractive choice for businesses:

Performance Boost

Integrating the speed of SLMs with the deep knowledge of LLMs enhances the effectiveness of the AI. Simple, recurring customer FAQs are handled quickly by the SLM, while the LLM handles complex questions, such as detailed customer service queries. This balance yields faster and more accurate outcomes across various applications.
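The FAQ-versus-complex-question split can be sketched as a small router. This is a minimal illustration, not a production design: `small_model` and `large_model` are hypothetical stand-ins (a lookup table and a placeholder string) for real model calls, and the FAQ entries are invented for the example.

```python
from typing import Optional, Tuple

# Hypothetical FAQ table standing in for what a small model could answer directly.
FAQ_ANSWERS = {
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
}


def normalize(query: str) -> str:
    """Lowercase the query, drop punctuation, and collapse whitespace."""
    kept = "".join(ch for ch in query.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def small_model(query: str) -> Optional[str]:
    """Stand-in for an SLM: answers known FAQs, returns None otherwise."""
    return FAQ_ANSWERS.get(normalize(query))


def large_model(query: str) -> str:
    """Stand-in for an LLM call; a real system would call a hosted model here."""
    return f"[LLM] detailed answer for: {query}"


def route(query: str) -> Tuple[str, str]:
    """Try the cheap model first and fall back to the large one."""
    answer = small_model(query)
    if answer is not None:
        return "slm", answer
    return "llm", large_model(query)


for q in ["What are your opening hours?",
          "Why was I charged twice after changing plans mid-month?"]:
    handler, answer = route(q)
    print(handler, "->", answer)
```

The design choice here is that the cheap path is always attempted first, so the expensive model is only invoked when the small one has nothing to say.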

Scalability

Hybrid AI models scale well in enterprise environments because they allocate computational resources to where they are most needed. SLMs take care of simpler tasks that require fewer resources, while LLMs deal with high-demand, data-heavy processing.

Flexibility

Hybrid AI models are flexible: SLMs can be used for structured data, such as financial transactions, and LLMs for unstructured data, such as customer feedback. This balance lets enterprises accomplish many types of tasks, since the system adapts to different kinds of data across industries.

Accuracy and Efficiency

Hybrid AI balances accuracy and efficiency by using SLMs for fast, easy tasks and LLMs for deep linguistic understanding. This collaboration helps organizations manage both standard and complex operations or transactions effectively, improving overall AI system performance.

Challenges of Developing Hybrid AI Systems

Although these systems have many advantages, hybrid AI still poses some challenges:

SLM and LLM Integration Issues

Technically, fusing the computational logic of SLMs and LLMs is a major challenge. Getting both to work well together often requires extensive workflow customization.

Data Management in AI

Integrating structured and unstructured data across an enterprise is even more challenging. In addition, making sure each piece of data is parsed correctly and processed by the appropriate model adds further layers of complexity to system design and deployment.
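One way to picture the parsing-and-dispatch problem is a front door that decides whether an incoming payload is structured or free text before choosing a pipeline. The sketch below assumes, purely for illustration, that structured records arrive as JSON objects and everything else is treated as unstructured text; the pipeline names are hypothetical labels, not real services.

```python
import json
from typing import Any, Tuple


def classify_payload(raw: str) -> Tuple[str, Any]:
    """Return ("structured", parsed) for a JSON object, else ("unstructured", raw)."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return "unstructured", raw
    # Bare numbers, strings, or lists are valid JSON but not records we can route.
    if isinstance(parsed, dict):
        return "structured", parsed
    return "unstructured", raw


def dispatch(raw: str) -> str:
    """Pick a (hypothetical) pipeline name based on the payload kind."""
    kind, _ = classify_payload(raw)
    return "slm_pipeline" if kind == "structured" else "llm_pipeline"


print(dispatch('{"account": "A-1", "amount": 120.5, "currency": "USD"}'))
print(dispatch("The app keeps logging me out whenever I switch tabs."))
```

In practice the hard part is everything this sketch leaves out: schema validation, mixed payloads, and records that are technically structured but carry free-text fields.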

Computational Costs

Hybrid models are typically much more resource-intensive and demand more computing power than other types of models. Training and running LLMs, which involve billions of parameters, is known to strain an enterprise’s computational resources and is expensive to maintain.

Maintaining Model Balance

Balancing the speed of SLMs with the deep processing power of LLMs proves challenging, especially as the complexity of the data or the demands of the tasks increase. A major question is when to use SLMs for efficient processing of simple, structured data and when to use LLMs for more complex, unstructured tasks.

As the system scales up, this equilibrium becomes harder to maintain, especially when the volume of unstructured data grows and the more computationally intensive LLMs are needed more often. This can increase computational overhead and slow performance.
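A common way to frame the "when to use which model" decision is a confidence-based cascade: the SLM answers first and the query is escalated to the LLM only when the SLM is unsure. The sketch below is an assumption-laden illustration, not a method the article prescribes. The confidence heuristic, the 0.8 threshold, and the cost units (1 for an SLM call, 20 for an LLM call) are all invented to show how average cost climbs as more traffic escalates.

```python
from typing import Callable, Tuple


def cascade(query: str,
            slm: Callable[[str], Tuple[str, float]],
            llm: Callable[[str], str],
            threshold: float = 0.8) -> Tuple[str, str]:
    """Use the SLM answer if its confidence clears the threshold, else escalate."""
    answer, confidence = slm(query)
    if confidence >= threshold:
        return "slm", answer
    return "llm", llm(query)


def expected_cost_per_query(escalation_rate: float,
                            slm_cost: float,
                            llm_cost: float) -> float:
    """Every query pays for the SLM attempt; escalated ones also pay for the LLM."""
    return slm_cost + escalation_rate * llm_cost


def demo_slm(query: str) -> Tuple[str, float]:
    # Hypothetical heuristic: short queries count as "easy" and get high confidence.
    confidence = 0.95 if len(query.split()) <= 6 else 0.40
    return f"[SLM] {query}", confidence


def demo_llm(query: str) -> str:
    return f"[LLM] {query}"


print(cascade("reset my password", demo_slm, demo_llm)[0])
print(cascade("compare my last three invoices and explain every difference",
              demo_slm, demo_llm)[0])

# Hypothetical cost units: 1 per SLM call, 20 per LLM call.
for rate in (0.1, 0.3, 0.5):
    cost = expected_cost_per_query(rate, slm_cost=1.0, llm_cost=20.0)
    print(f"escalation rate {rate:.0%}: average cost {cost:.1f} units")
```

With these made-up numbers, moving from 10% to 50% escalation more than triples the average cost per query, which is the scaling pressure described above.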

Case Studies: Real-World Applications of Hybrid AI Architectures

Hybrid AI architectures offer substantial benefits across several industries:

Customer Support

Hybrid AI models are used to build chatbots that answer easy customer queries with SLMs and hand intricate problems to LLMs for more advanced support, thereby satisfying customers faster and more accurately.

Financial Institutions

In finance, hybrid models use SLMs to process structured transaction data and LLMs to handle unstructured customer feedback or fraud detection. For example, Bank of America uses LLMs to deliver real-time updates and detect fraud. Nearly 60% of Bank of America clients use LLM products for guidance on insurance, investments, and retirement plans.

Retail Industry

Hybrid models let retailers offer personalized recommendations based on consumer behavior and preferences through LLMs and manage inventory with the help of SLMs, enhancing both customer experience and operational efficiency. Personalized recommendations matter to 91% of customers.

Retail and ecommerce businesses also use LLM-based chatbots to quickly create engaging and informative product descriptions. These descriptions are a vital selling point, as 76% of shoppers check product descriptions before purchasing.

Healthcare Applications

Healthcare organizations embrace hybrid AI, using SLMs for structured patient data and LLMs for unstructured medical literature, in an effort to improve diagnosis and treatment. LLMs help healthcare professionals by analyzing patient data, which supports more accurate diagnoses and better treatment results. They achieve nearly 84% accuracy by drawing on historical data and similar cases. Hybrid systems also automate clinical documentation.

The Future of Hybrid AI Architectures: Trends to Watch

Hybrid AI architectures are expected to become more advanced as the following trends develop:

Hybrid AI Advancements

Researchers are exploring new ways to integrate machine learning algorithms in hybrid AI, such as fine-tuning techniques, which should lead to more efficient hybrid systems. These advances are primarily intended to reduce the computational overhead of training large models while still achieving high accuracy.

Smarter AI Systems

Next-generation hybrid AI models will be more intelligent and autonomous. They will be able to learn from a much broader range of sources and, on the basis of the input received, provide better and more context-aware responses. For instance, as the demand for real-time analytics increases, advances in real-time data fusion will make it possible to process and analyze data as it is generated.

AI for Enterprise Solutions

Hybrid AI models are set to play a major role in the finance, retail, and healthcare industries. For example, the use of synthetic data (artificially generated data that mimics real-world data) will increase as organizations look to overcome data scarcity. Synthetic data generation will help train AI models better.

Such innovations will push businesses towards more streamlined processes, improved customer experiences, and better-informed decisions based on structured and unstructured data.
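As a toy illustration of the synthetic data idea, the sketch below fits a mean and standard deviation to a handful of "real" transaction amounts and samples new values from a normal distribution with those parameters. The sample values, the normal-distribution assumption, and the function name are all hypothetical; real synthetic data pipelines model far richer structure and correlations.

```python
import random
import statistics
from typing import List, Sequence


def synthesize_amounts(real_amounts: Sequence[float], n: int, seed: int = 0) -> List[float]:
    """Sample n synthetic amounts from a normal fit to the real ones."""
    rng = random.Random(seed)                 # seeded for reproducibility
    mean = statistics.mean(real_amounts)
    stdev = statistics.stdev(real_amounts)    # needs at least two real values
    # Clamp at zero because a transaction amount cannot be negative.
    return [max(0.0, rng.gauss(mean, stdev)) for _ in range(n)]


real = [12.5, 40.0, 33.2, 18.9, 25.0]         # invented example values
synthetic = synthesize_amounts(real, n=1000, seed=42)

print(f"real mean:      {statistics.mean(real):.2f}")
print(f"synthetic mean: {statistics.mean(synthetic):.2f}")
```

The synthetic sample should have a mean close to the real one, while containing no actual customer record.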

Ethics in AI

As hybrid AI systems become more prominent, issues related to privacy, security, and responsible AI governance come to the forefront. An approach called ‘Federated Learning’ is likely to gain momentum because it enables collaborative learning without compromising data privacy, allowing organizations to leverage shared insights while keeping their data secure.
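The core of federated learning can be sketched with its aggregation step: each participant trains locally and shares only model weights, which a coordinator averages in proportion to each participant's dataset size (the federated averaging, or FedAvg, scheme). The example below is deliberately simplified and rests on assumptions: weights are plain lists of floats, local training is skipped, and there is no encryption or secure aggregation.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client weight vectors, weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client weight vector")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all weight vectors must have the same length")

    total = sum(client_sizes)
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


# Two hypothetical organizations; raw data never leaves them, only these weights do.
bank_a = [1.0, 2.0]     # trained on 100 local records
bank_b = [3.0, 4.0]     # trained on 300 local records

global_weights = federated_average([bank_a, bank_b], [100, 300])
print(global_weights)
```

The participant with more data pulls the shared model further toward its own weights, yet neither side ever sees the other's records.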

Conclusion

Hybrid AI architectures open the way for businesses to combine the strengths of small language models (SLMs) and large language models (LLMs). Together, the two approaches enable enterprise AI solutions that are more scalable, adaptable, and efficient at dealing with structured as well as unstructured data. Whether in customer service, finance, retail, or healthcare, hybrid AI will transform industries with smarter, context-aware solutions.

These models are the future of AI, and they can improve performance, scalability, and adaptability for businesses facing the challenges of an increasingly AI-driven world.

Shashank Mishra

Shashank is an experienced B2B SaaS writer for eCommerce, AI, productivity, and FinTech tools. His interests include reading, music, poetry, and traveling.